Research philosophy

Our work aims to do two things: mitigate, disrupt, slow down, or eliminate harms caused by AI technology, and cultivate spaces that accelerate the imagination and creation of new technologies and tools to build a better future.

Projects

We use quantitative and qualitative methodologies to equip historically marginalized groups with data to advocate for change. See below for some of our projects.

Impacts of spatial apartheid

Analyzing the impacts of South African apartheid using computer vision techniques and satellite imagery. Read our NeurIPS paper and this MIT Tech Review article, and check out our dataset and visualizations.

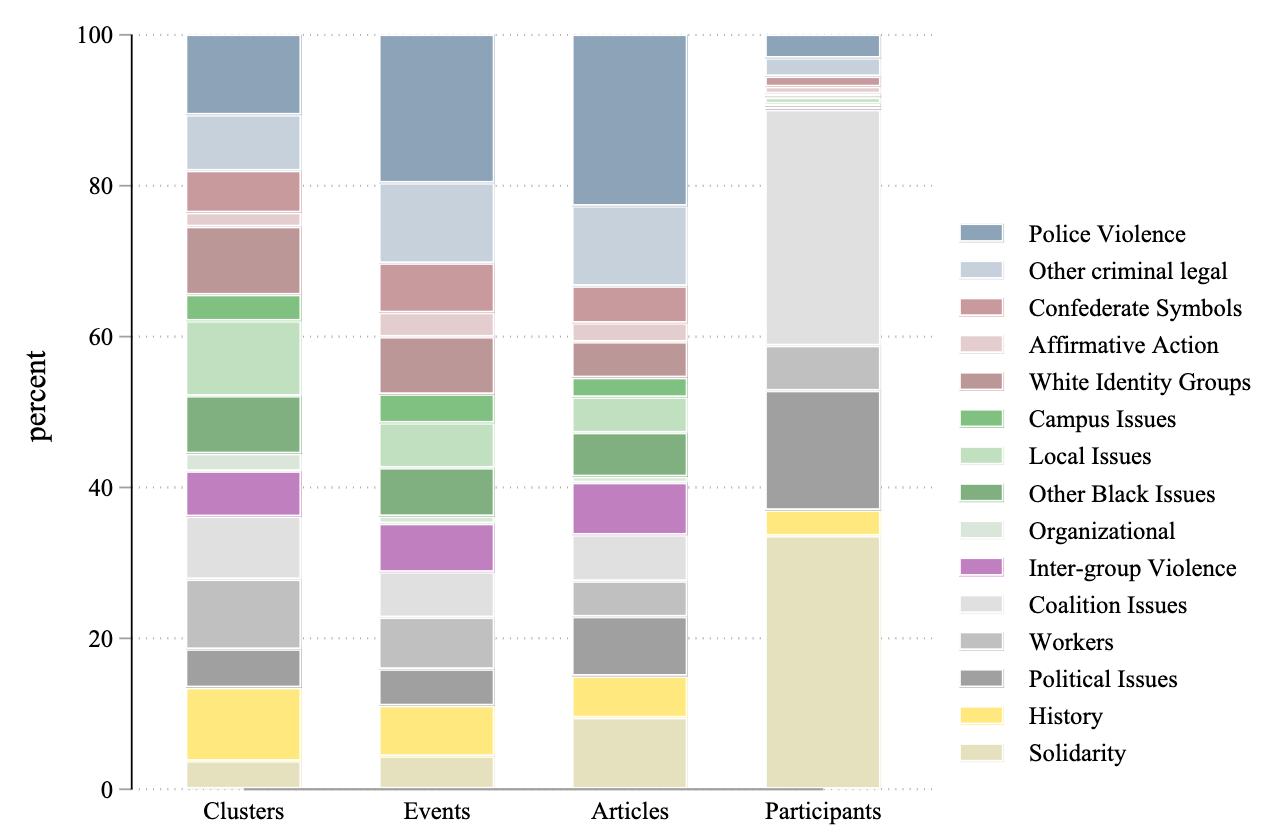

Anti-racist protests

Analyzing the history of Black protests in the US and Canada using machine learning methods. Read our papers in Sociological Science and Mobilization to learn more.

Social media harms

Creating natural language processing tools and using qualitative methods to analyze the impacts of social media platforms on neglected countries and languages (more soon). Watch our short documentary on the topic and go to dair-institute.org/tigray-genocide for our research findings.

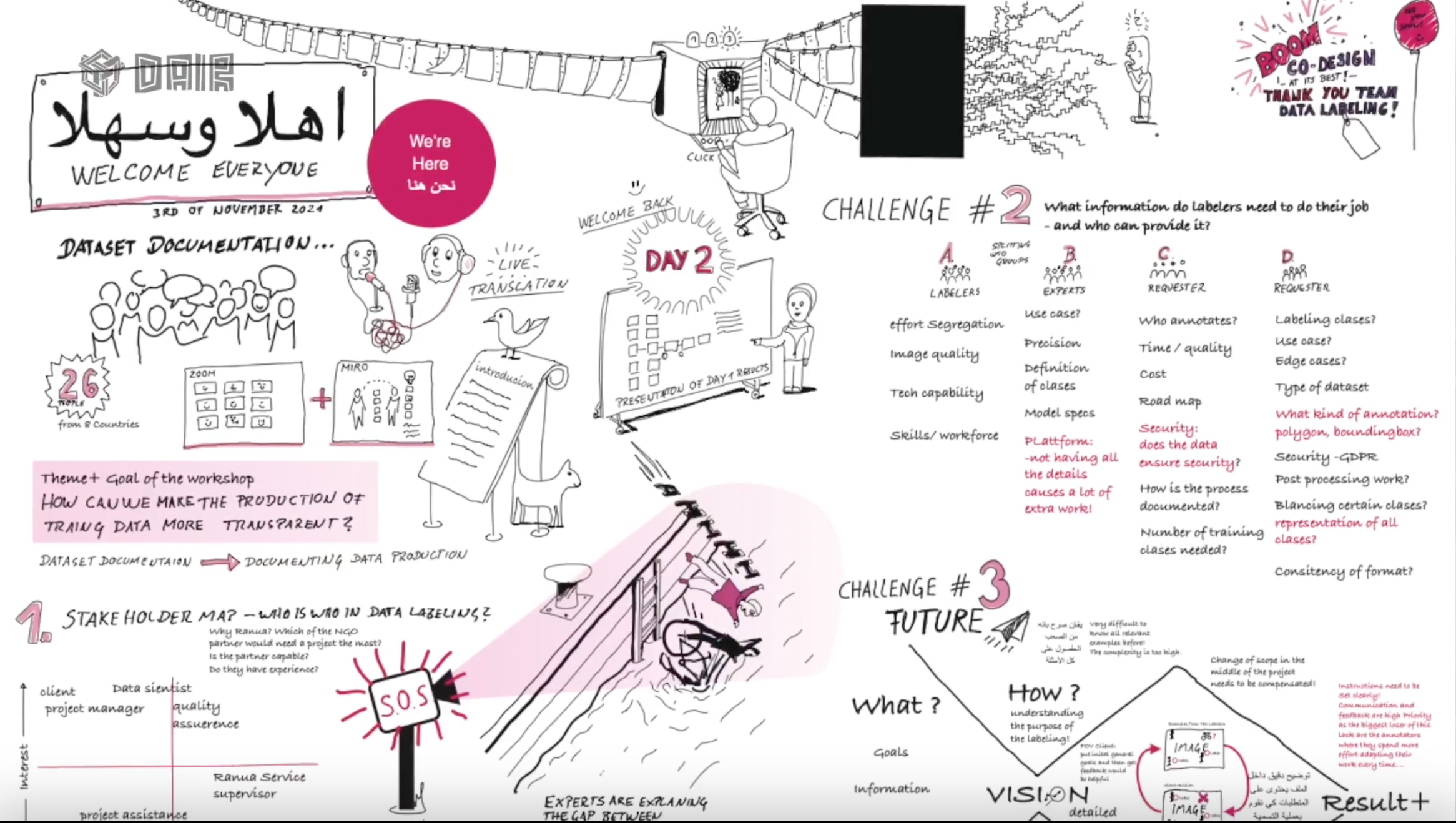

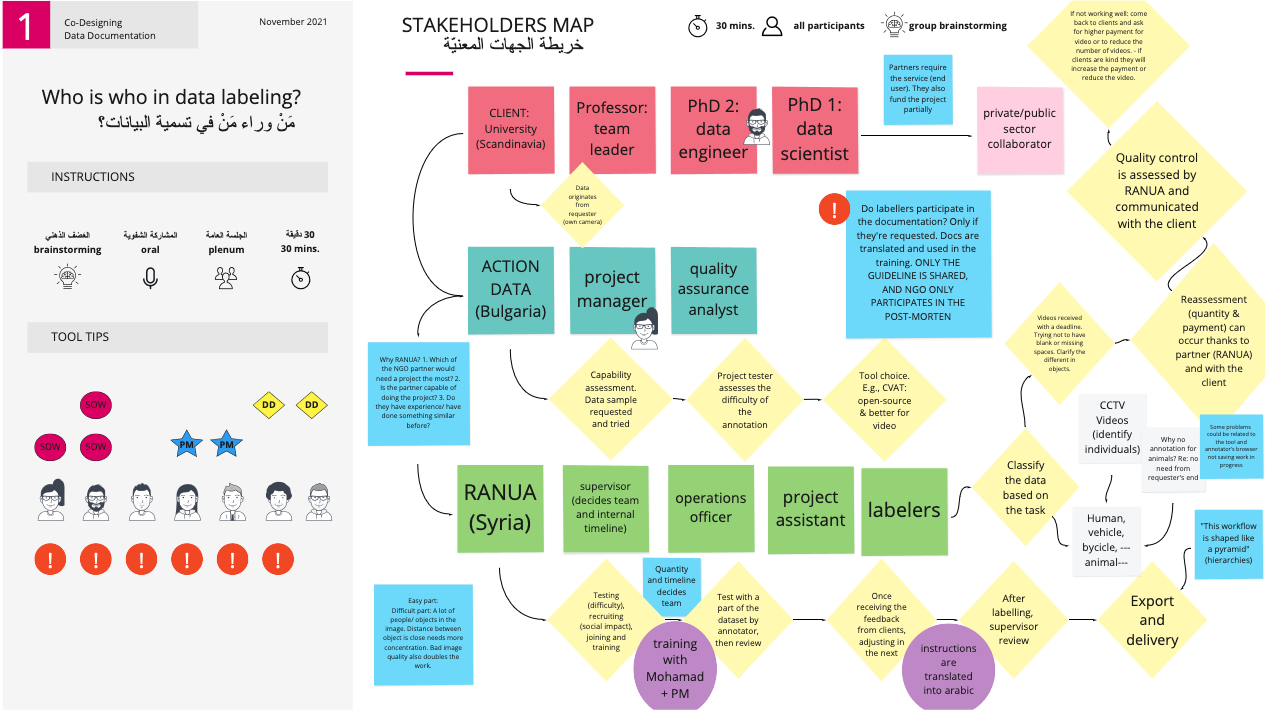

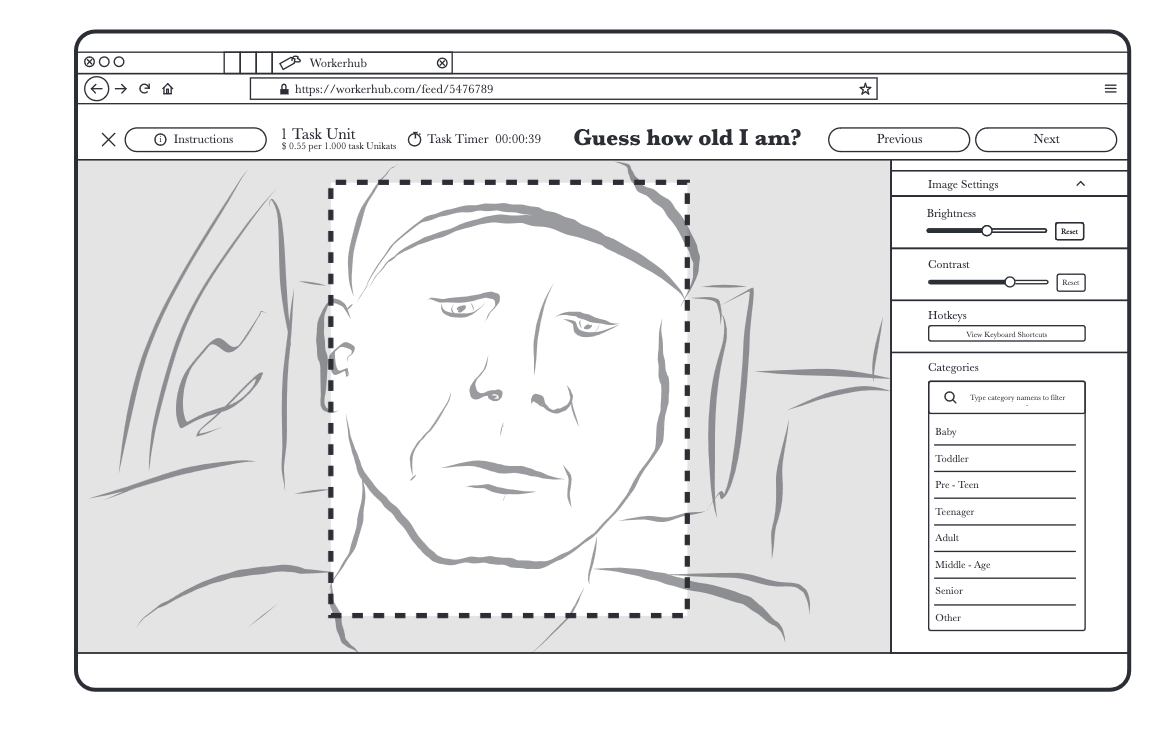

Data workers' inquiry

Centering the experiences of data workers in machine learning by using the Workers' Inquiry approach to uncover opportunities for collaborative knowledge exchange and collective organizing. Go to https://data-workers.org to find zines, reports, documentaries, podcasts and more created by 16 data workers from 4 continents.

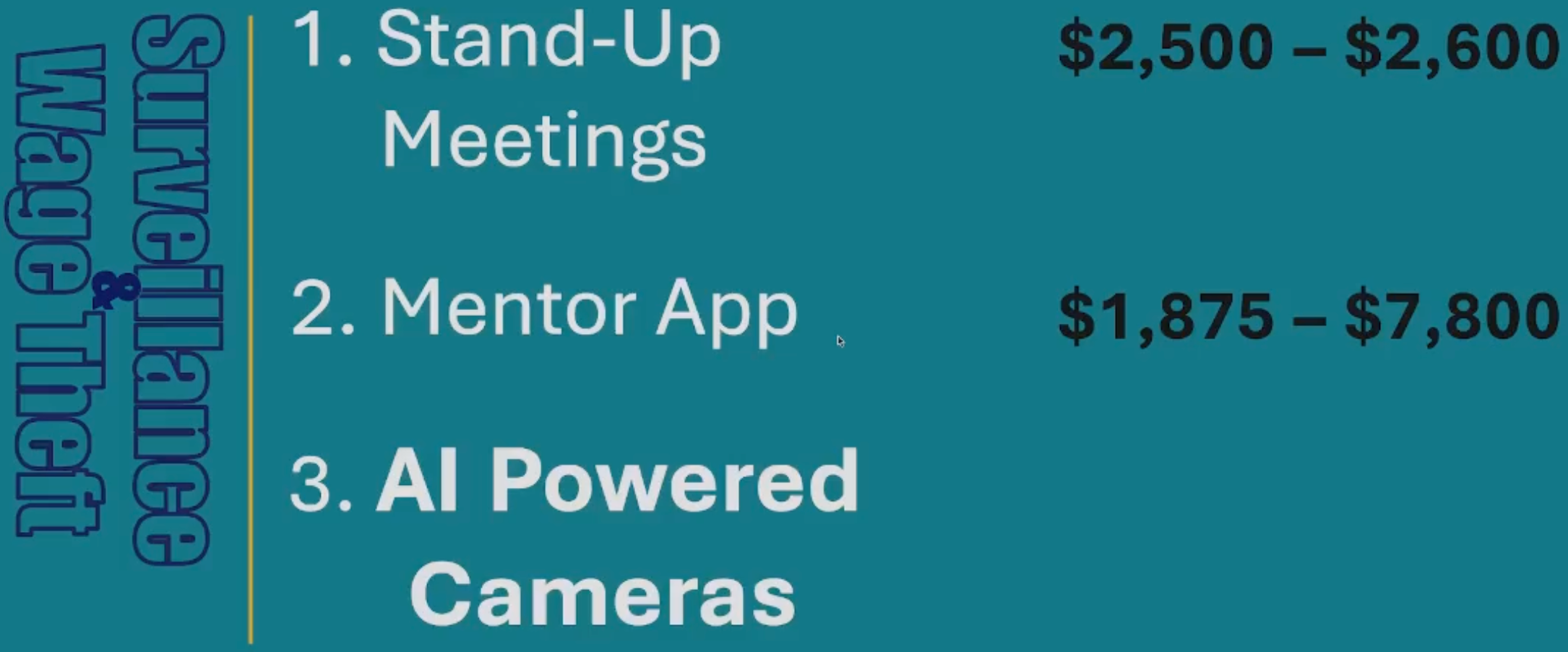

Wage theft calculator

Creating a wage-theft calculator to estimate how much workers are owed in wages stolen with the assistance of surveillance technologies. Watch Adrienne's talk.

We expose the real harms of AI systems while countering AI hype.

We build frameworks for non-exploitative, community-rooted research practice, including our projects below.

We imagine new technological futures in which everyone can see themselves centered in design, safety, and joy, beyond margins and borders.

Latest publications

We publish interdisciplinary work that uncovers and mitigates the harms of current AI systems, along with research, tools, and frameworks for the technological future we should build instead.

Talks and podcasts

A curated set of educational talks and interviews on topics ranging from algorithmic bias to participatory research.